NWebCrawler 1.0

For web searches to be fast and efficient, search services need to index every target location. This obviously isn't done by hand: dedicated applications such as NWebCrawler crawl the web, checking and downloading content from different sources to prepare it for indexing.
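The review doesn't describe NWebCrawler's internals, but the heart of most crawlers is a loop that downloads a page, pulls out its links, and queues the ones it hasn't seen yet. The Python sketch below illustrates only the link-extraction and queueing step, using nothing but the standard library. The names `extract_links` and `enqueue_new` are hypothetical and are not taken from NWebCrawler, which is a .NET application.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop the #fragment so the same
                # page is not queued twice under different anchors.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(base_url, html):
    """Return every link found in `html`, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


def enqueue_new(frontier, seen, links):
    """Append links not seen before to the frontier (a FIFO queue)."""
    for link in links:
        if link not in seen:
            seen.add(link)
            frontier.append(link)


if __name__ == "__main__":
    page = '<a href="/docs">Docs</a> <a href="page2.html#top">Next</a>'
    frontier, seen = deque(), {"https://example.com/index.html"}
    enqueue_new(frontier, seen, extract_links("https://example.com/index.html", page))
    print(list(frontier))
```

Using a FIFO queue gives breadth-first crawling, and the `seen` set keeps a page that is linked from many places from being downloaded more than once.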

On the bright side, the application needs no installation and can be run as soon as the download finishes. Because it is portable, it leaves the target PC's registry untouched, so system stability is not affected. However, make sure the .NET Framework is installed on any computer you want to run it on.

| Detail | Value |
|---|---|
| Software developer | Foam Liu |
| Grade | 3.1 (935) |
| Downloads count | 6994 |
| File size | < 1 MB |
| Systems | Windows All |

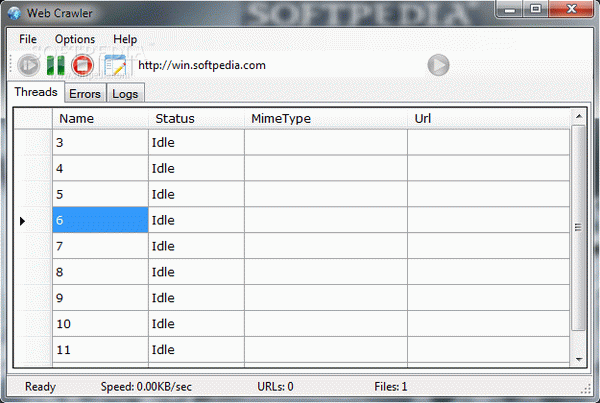

The interface is simple enough to figure out in a matter of seconds. Most of the window is taken up by a table listing every detected item, with columns for name, status, MIME type, and URL, while separate tabs show any errors encountered and the overall operation log.

To get started, enter the target URL in the corresponding field and start the crawl. Once connected, the table fills with the values described above, while the status bar reports the operation status, speed, number of URLs and files, total size, and CPU usage.

You can fine-tune the process from the Options menu. The connection options let you set the number of threads, along with three timing values in seconds: how long to sleep when the queue is empty, how long each thread sleeps between two connections, and the connection timeout.
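To make these connection options more concrete, here is a minimal Python sketch, assuming a simple design in which a fixed pool of threads pulls URLs from a shared queue. The `CrawlerSettings` field names, the pluggable `fetch` function, and the `max_idle_rounds` stopping rule are all hypothetical; this is not how NWebCrawler itself is implemented, and link discovery is left out to keep the example short.

```python
import queue
import threading
import time
import urllib.request
from dataclasses import dataclass


@dataclass
class CrawlerSettings:
    # Hypothetical names mirroring the four connection options.
    thread_count: int = 4
    idle_sleep: float = 1.0        # seconds to sleep when the queue is empty
    between_requests: float = 0.5  # seconds a thread waits between two connections
    timeout: float = 30.0          # connection timeout in seconds


def default_fetch(url, timeout):
    """Download one URL with urllib, honouring the connection timeout."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.headers.get_content_type(), resp.read()


def run_crawl(urls, settings, fetch=default_fetch, max_idle_rounds=2):
    """Fetch every URL in `urls` using a pool of worker threads.

    A worker exits after finding the queue empty `max_idle_rounds` times in
    a row, sleeping `settings.idle_sleep` seconds after each empty check.
    """
    work = queue.Queue()
    for url in urls:
        work.put(url)
    results = {}
    lock = threading.Lock()

    def worker():
        idle = 0
        while idle < max_idle_rounds:
            try:
                url = work.get_nowait()
            except queue.Empty:
                idle += 1
                time.sleep(settings.idle_sleep)
                continue
            idle = 0
            try:
                outcome = fetch(url, settings.timeout)
            except Exception as exc:  # keep the error, as an errors tab would
                outcome = exc
            with lock:
                results[url] = outcome
            time.sleep(settings.between_requests)

    threads = [threading.Thread(target=worker) for _ in range(settings.thread_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Passing a stub in place of `fetch` lets the loop run without network access. In practice, a larger `between_requests` value is the simplest way to avoid overloading the server being crawled.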

If you're only interested in specific file types, the Filter tab lets you configure which MIME types are allowed. All of them are enabled by default, but you can select only the formats you want to download, add new types, and remove existing ones.
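A MIME filter like this can be modelled as a table of types, each enabled or disabled, that is checked against the `Content-Type` header of every response. The Python sketch below assumes that design; the class name and the default type list are illustrative placeholders, not NWebCrawler's actual defaults.

```python
# Hypothetical default list for illustration; not NWebCrawler's own list.
DEFAULT_MIMES = ("text/html", "text/plain", "application/pdf", "image/jpeg", "image/png")


class MimeFilter:
    """Table of MIME types a crawler may download, each enabled or disabled."""

    def __init__(self, mimes=DEFAULT_MIMES):
        # Every listed type starts out enabled.
        self.enabled = {m.lower(): True for m in mimes}

    def add(self, mime):
        """Add a new type (or re-enable an existing one)."""
        self.enabled[mime.lower()] = True

    def remove(self, mime):
        """Delete a type from the table entirely."""
        self.enabled.pop(mime.lower(), None)

    def set_enabled(self, mime, on):
        """Toggle a type that is already in the table."""
        if mime.lower() in self.enabled:
            self.enabled[mime.lower()] = on

    def accepts(self, content_type):
        """Check a Content-Type header value; unknown types are rejected."""
        # Drop parameters such as "; charset=utf-8" before the lookup.
        mime = content_type.split(";", 1)[0].strip().lower()
        return self.enabled.get(mime, False)
```

For example, `MimeFilter().accepts("text/html; charset=UTF-8")` is true, while a type missing from the table, such as `video/mp4`, is rejected until it is added.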

To sum it up, NWebCrawler is a lightweight tool for keeping track of a website's content updates, downloading content that matches custom filters, and automatically saving files for use in optimizing search engines. It may feel a little rough around the edges, but it only takes a little while to figure out and is well worth the effort.